Drift of a clock using floating-point numbers

Simulation of a clock couting time by increments of 0.1 seconds. Analysis of the drift over time due to the rounding errors in underlying floating-point computations.

Authors: Claude Marché / Ali Ayad

Topics: Floating-Point Computations

Tools: Frama-C / Jessie / Gappa

see also the index (by topic, by tool, by reference, by year)

Description

We consider the following C code which increments a counter by steps of 0.1.

float clock_single(int n) {

float t = 0.0f;

int i;

for(i=0;i<n;i++) t = t + 0.1f;

return t;

}

This is indeed a classical example taken to illustrate rounding errors, coming from the fact that 0.1 is not exactly representable. It is also related to a known bug which arose in the critical software of a patriot missile battery. (An internal clock was incremented by steps of 0.1 seconds, and because of rounding errors occuring over time, the drift between that internal clock and the real time prevented the anti-missile to launch, thus causing the death of several soldiers during the Gulf War in 1991. See http://autarkaw.wordpress.com/2008/06/02/round-off-errors-and-the-patriot-missile/ and http://en.wikipedia.org/wiki/MIM-104_Patriot#Failure_at_Dhahran.)

Analysis

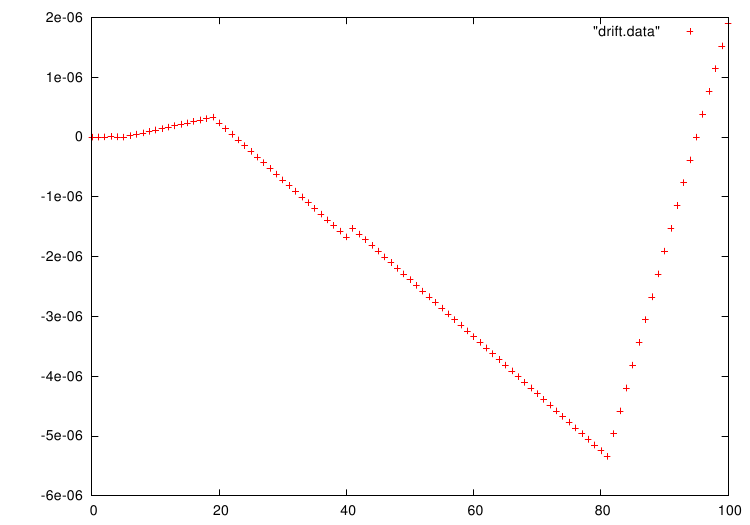

In a mathematical model of that program, t=i× 0.1 is true at each iteration of the loop. But the rounding of 0.1 in single precision is 0x0.199999Ap0 ≃ 0.1 + 1.5e−9. Naively, one could expect i× 0.1 ≤ t ≤ i× (0.1 + 1.5e−9) to be a loop invariant in this C program. However, the behavior of floating-point computations is much more complicated, because when t gets higher, the rounding error when computing t+0.1f can become either positive or negative, and gets higher in absolute value: the graph below shows the values of t − i× 0.1 for the first 100 iterations of the loop.

What we can prove on such a program is then an invariant of the form |t − i× 0.1| ≤ i× C for some constant C to determine. The problem is that the constant C depends on how many iterations we are going to make. Following the analogy with the clock of the missile battery, we assume that it will not run for more than one day (as for the analogy with the missile battery bug: indeed the clock had run for 4 days, although it was not supposed to), so we are going to bound the number n of iterations by one million.

Proof

The fully annotated code is given below.

The lemmas are given to help the automatic provers: the lemma

real_of_int_inf_NMAX is needed by Gappa to prove the

assertion on the rounding error. The lemma real_of_int_succ

helps the SMT solvers to prove preservation of the loop

invariant. A is a bound of (float)0.1 − 0.1, whereas B is

a bound of round_error(t+(float)0.1) for 0 ≤ t ≤ 0.1

× NMAX.

Running this code into our tool chain results in 17 VCs. The first two correspond to the two lemmas: the first one is proved by Gappa, whereas the second is proved by all SMT solvers and Gappa. 5 VCs come from runtime error checks: 1 for the overflow in t+0.1, proved by both SMT solvers and Gappa, and 4 others from integer overflow checks and loop termination checks, proved by SMT solvers. The remaining 10 VCs come from the behavioral properties of the C function: 3 to check that loop invariants initially hold (proved by SMT solvers and Gappa), 2 for the first assertion (proved by SMT solvers), 1 for the second assertion (proved by Gappa only), 3 to check that the loop invariants are preserved by any loop iteration (proved by SMT solvers but not Gappa), and finally the postcondition, proved by SMT solvers. In all and thanks to the lemmas, everything is proved with automatic provers only.

The constant B was determined using Gappa. Here are other values of B in function of NMAX, together with the corresponding approximate value of NMAX×C, that is the bound we obtain on the error at the end of iteration.

| NMAX | 103 | 104 | 105 | 106 | 107 |

| B | 2−18 | 2−15 | 2−11 | 2−8 | 2−4 |

| NMAX×C | 0.004 | 0.3 | 48.8 | 3906 | 625000 |

In other words, it is proved that the drift after a bit more than one day is not larger than 4,000 seconds. This is a lot, but it is of course only an upper bound, the real error is much smaller because rounding errors compensate. (To conclude the story of the missile battery bug: indeed fixed-point computations where in use there, which make the rounding errors even larger and do not compensate. The lesson to learn is that it would be much better to use a representable number for the tick interval: 1/8 or 1/16 for example.)

References

- [1]

- Ali Ayad and Claude Marché. Multi-prover verification of floating-point programs. In Jürgen Giesl and Reiner Hähnle, editors, Fifth International Joint Conference on Automated Reasoning, Lecture Notes in Artificial Intelligence, Edinburgh, Scotland, July 2010. Springer.

- [2]

- Sylvie Boldo and Claude Marché. Formal verification of numerical programs: from C annotated programs to mechanical proofs. Mathematics in Computer Science, 2011.

#define NMAX 1000000

#define NMAXR 1000000.0

/*@ lemma real_of_int_inf_NMAX:

@ \forall integer i; i <= NMAX ==> i <= NMAXR; */

//@ lemma real_of_int_succ: \forall integer n; n+1 == n + 1.0;

#define A 1.49012e-09

#define B 0x1p-8

#define C (B + A)

/*@ requires 0 <= n <= NMAX;

@ ensures \abs(\result - n*0.1) <= n * C; */

float clock_single(int n) {

float t = 0.0f;

int i;

/*@ loop invariant 0 <= i <= n;

@ loop invariant \abs(t - i * 0.1) <= i * C ;

@ loop variant n-i;

@*/

for(i=0;i<n;i++) {

L: //@ assert 0.0 <= t <= NMAXR*(0.1+C) ;

t = t + 0.1f;

//@ assert \abs(t - (\at(t,L) + (float)0.1)) <= B;

}

return t;

}